Aware: Tactile Navigation Platform for the visually impaired and blind

Final Bachelor Project

Innovation Research, circuit design, C++, Literature Review, User Centric Design, Prototyping

Introduction

Visually impaired and blind people face various struggles throughout the day due to their loss of sight. Simple activities such as shopping, cooking, leaving the house, meeting someone and avoiding obstacles become challenging without the visual cues on which most of us rely every day. This project focuses on how the target users navigate outside the house.

To obtain the information they need, visually impaired and blind people (VIP/B) can choose between the classic options of a cane, a guide dog and a smartphone. However, many other devices exist, each offering different information and functionality depending on the user's intentions. This pushes users to collect a multitude of tools and apps to gather the missing information, each of which requires a different kind of interaction.

Instead of multiple devices, each requiring its own way of interacting, the user has a central platform that provides the data essential for navigation. What information is needed depends on the user's level of visual impairment. A downside of current interfaces is their heavy focus on visual representation, which extends across both their input and output solutions.

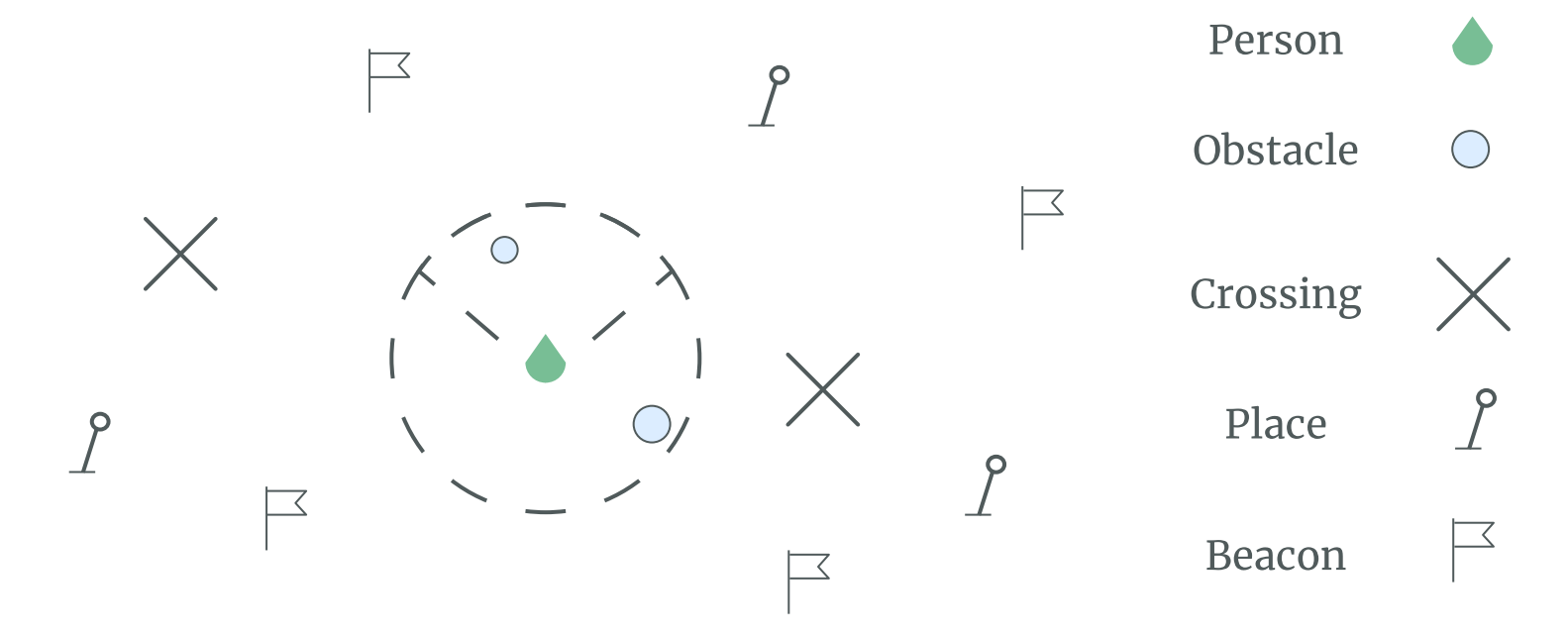

Someone who can still see moving objects such as bikes might nevertheless need to know whether they are still on the sidewalk and not on the bike path. This data could then be acquired on demand and communicated. The device can adapt to the user's personal strategy, enhancing the independence of VIP/B in outdoor navigation.

The concept

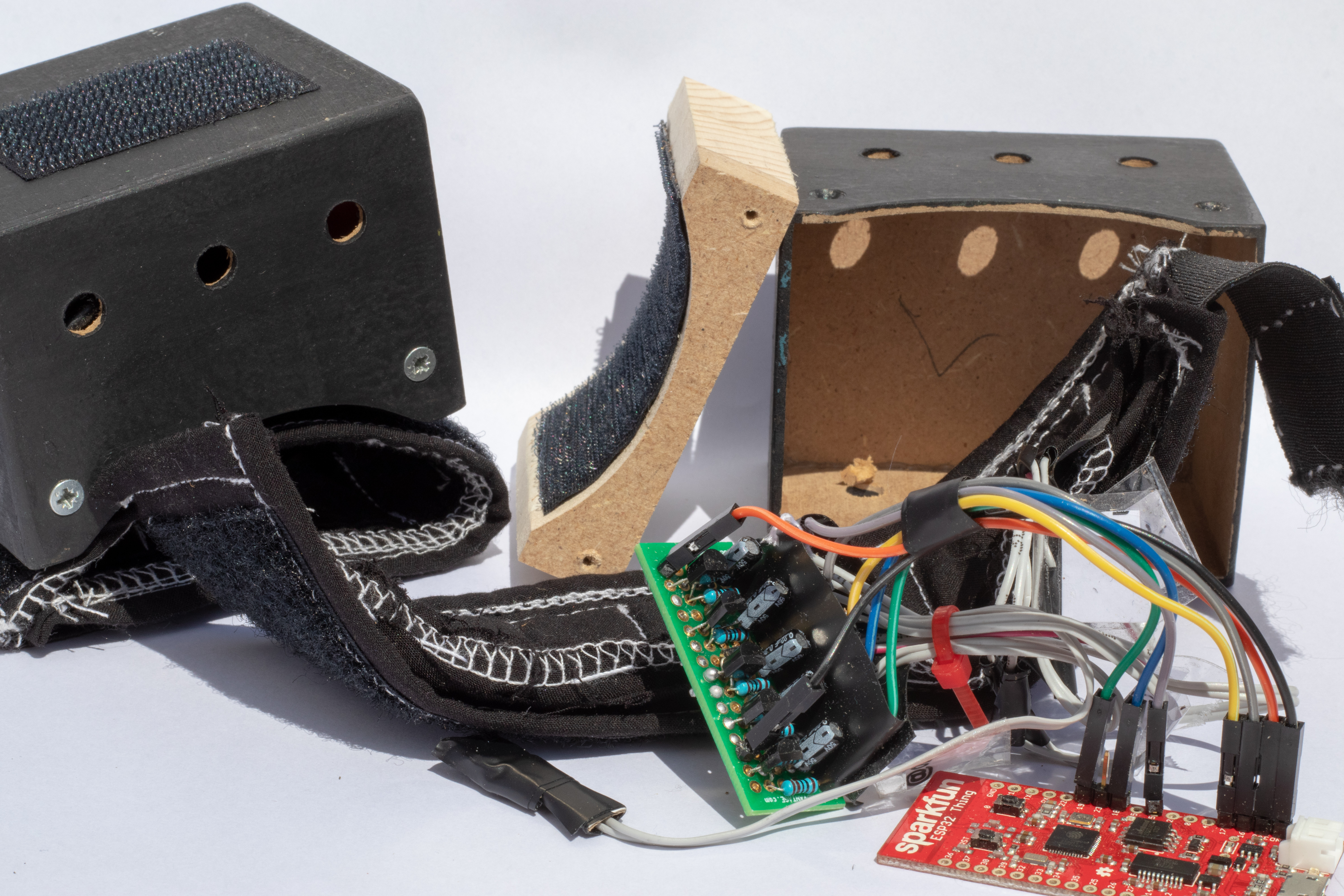

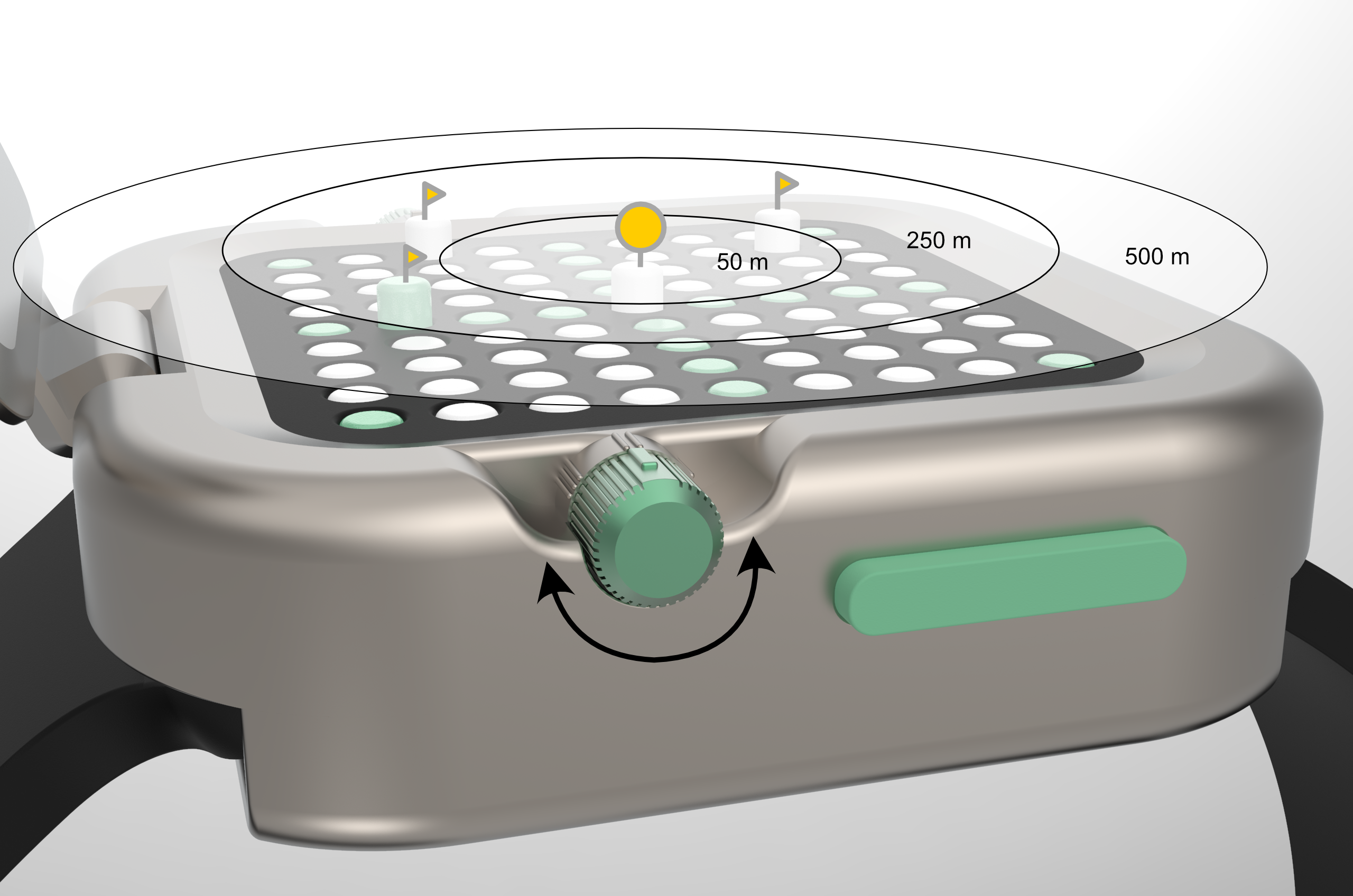

The centerpiece of the design is the tactile device and its vibration patterns. Data is stored on the smartphone and sent to the device via Bluetooth. Through the smartphone, the user can acquire more data about locations, places, facilities and more. This requires an additional app on the phone, which gathers the data from various other apps.

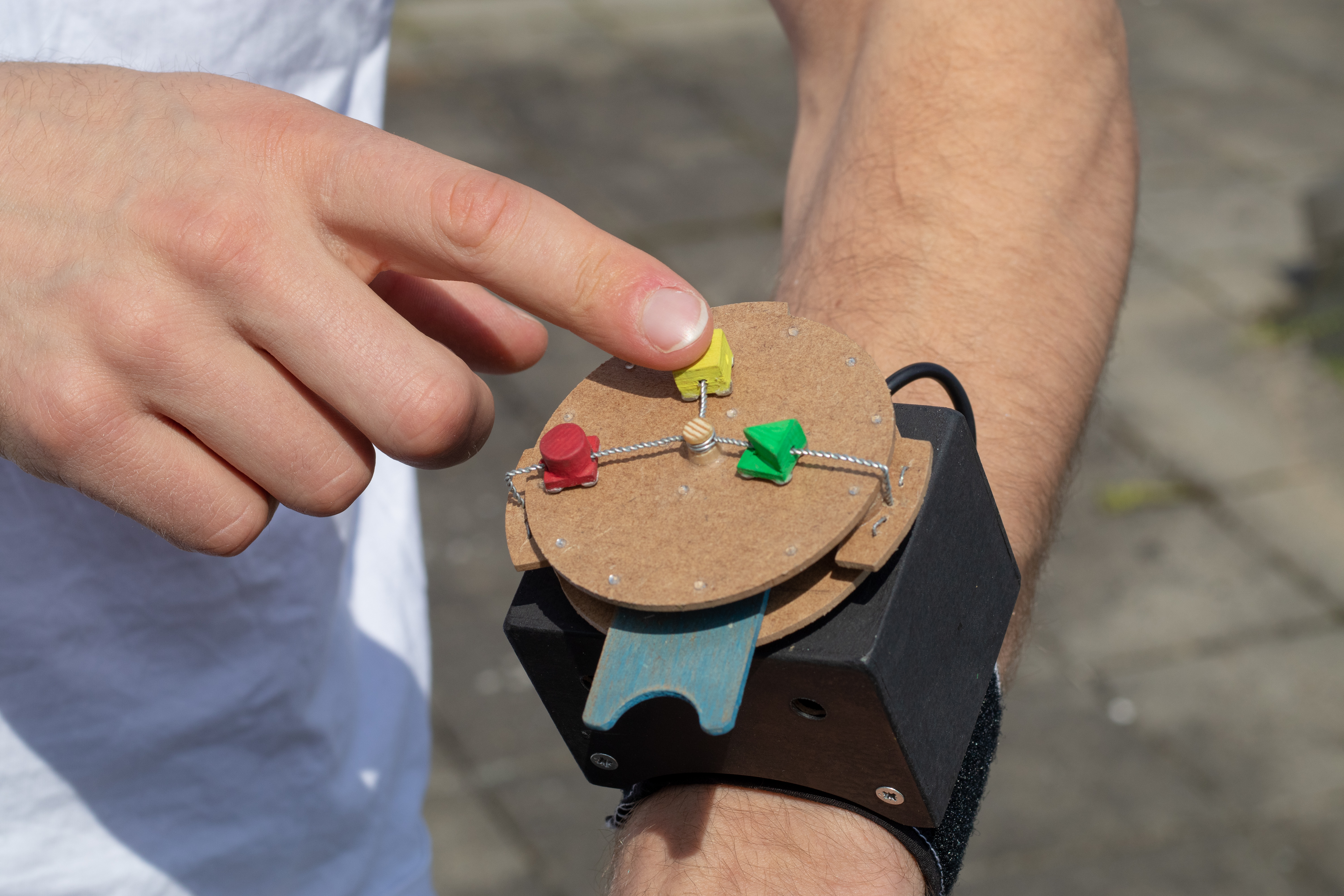

To activate the device, the user opens the cover, which protects the interface from damage during travel.

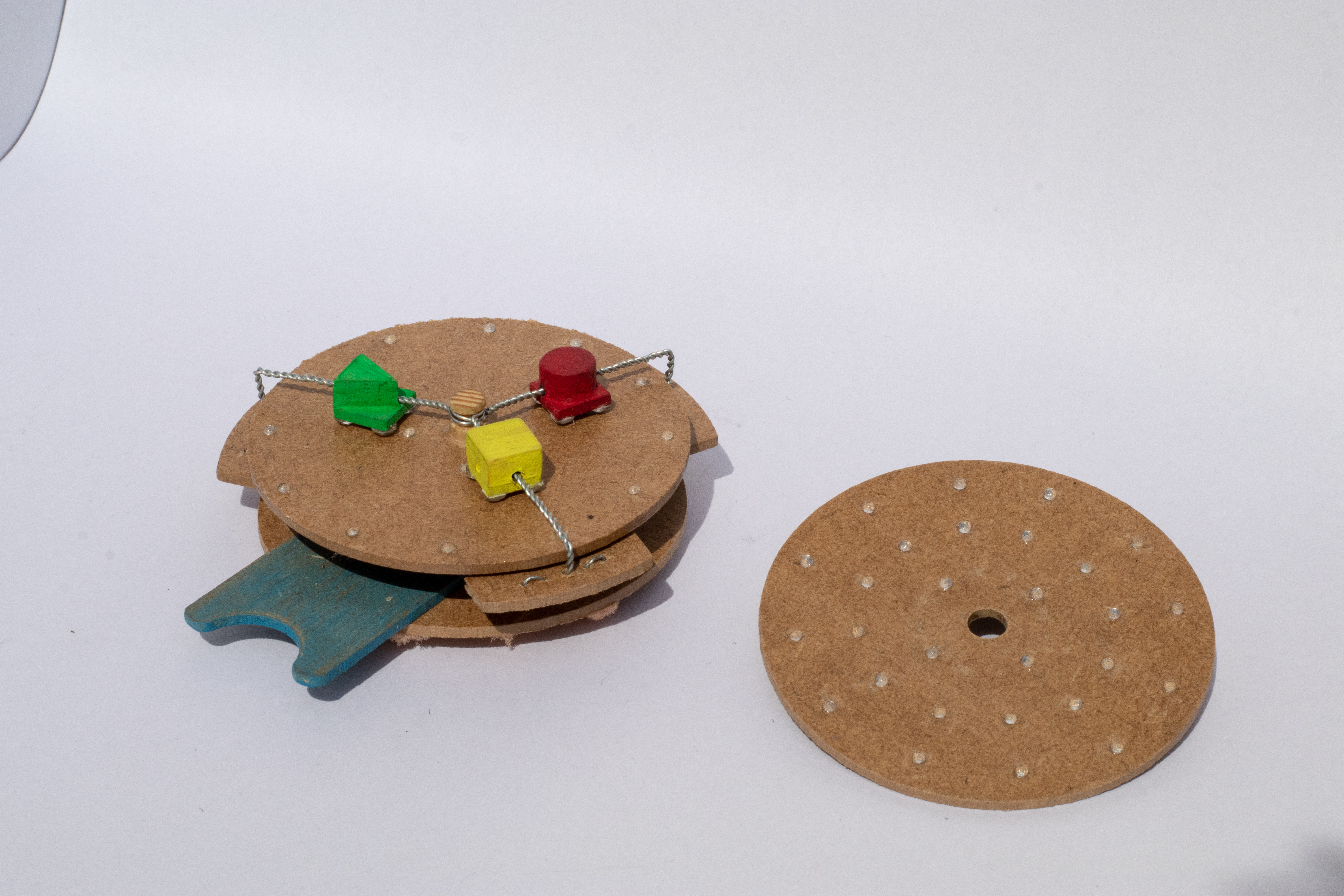

The interface consists of a 9x9 pin array with a colored structure for visually impaired users who still have some remaining vision and benefit from contrasting visual cues.

On the right, two buttons let the user interact with the list of waypoints without needing the smartphone. In addition, a three-stage rotary dial switches between the types of waypoints. The dial settings are distinguished by three haptic shapes: triangle, rectangle and circle.
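The interaction between the dial and the two buttons can be sketched as a small state holder. This is only an illustration under stated assumptions: the class `WaypointSelector`, the enum `DialStage`, the "next"/"previous" meaning of the two buttons and the wrap-around behaviour are all hypothetical and not taken from the actual firmware.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical names throughout; the stages are labelled by their haptic shape.
enum class DialStage { Triangle = 0, Rectangle = 1, Circle = 2 };

class WaypointSelector {
public:
    // Store a waypoint name under one of the three dial stages.
    void add(DialStage stage, const std::string& name) {
        lists_[static_cast<int>(stage)].push_back(name);
    }

    // Turning the dial switches the waypoint type and restarts at the first entry.
    void turnDial(DialStage stage) {
        stage_ = stage;
        index_ = 0;
    }

    // Assumed button behaviour: step forward or backward, wrapping at the ends.
    void pressNext() {
        const std::size_t n = current().size();
        if (n > 0) index_ = (index_ + 1) % n;
    }
    void pressPrevious() {
        const std::size_t n = current().size();
        if (n > 0) index_ = (index_ + n - 1) % n;
    }

    // Currently selected waypoint, or an empty string if the list is empty.
    std::string selected() const {
        const std::vector<std::string>& list = current();
        return list.empty() ? std::string() : list[index_];
    }

private:
    const std::vector<std::string>& current() const {
        return lists_[static_cast<int>(stage_)];
    }

    std::vector<std::string> lists_[3];
    DialStage stage_ = DialStage::Triangle;
    std::size_t index_ = 0;
};
```

Keeping one list per dial stage means the buttons never need to know which waypoint type is active; they only move within whatever list the dial currently points at.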

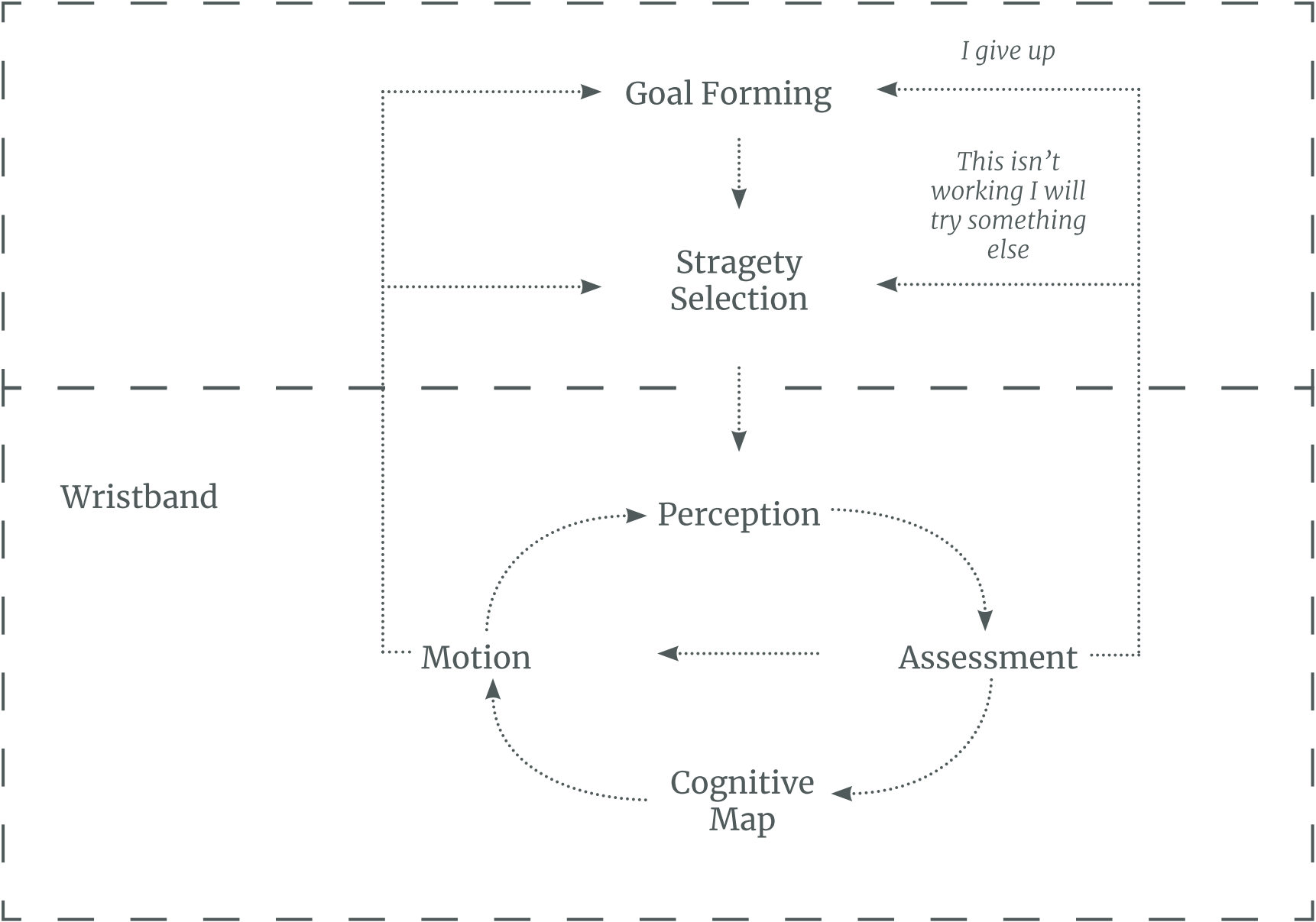

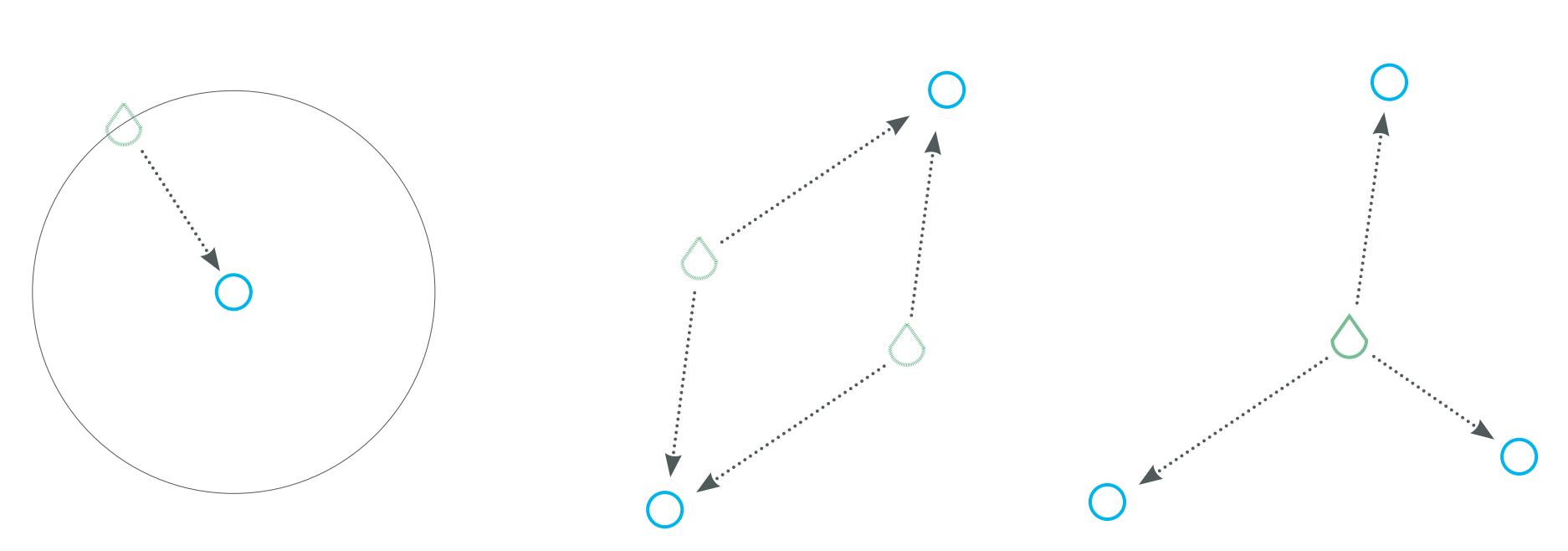

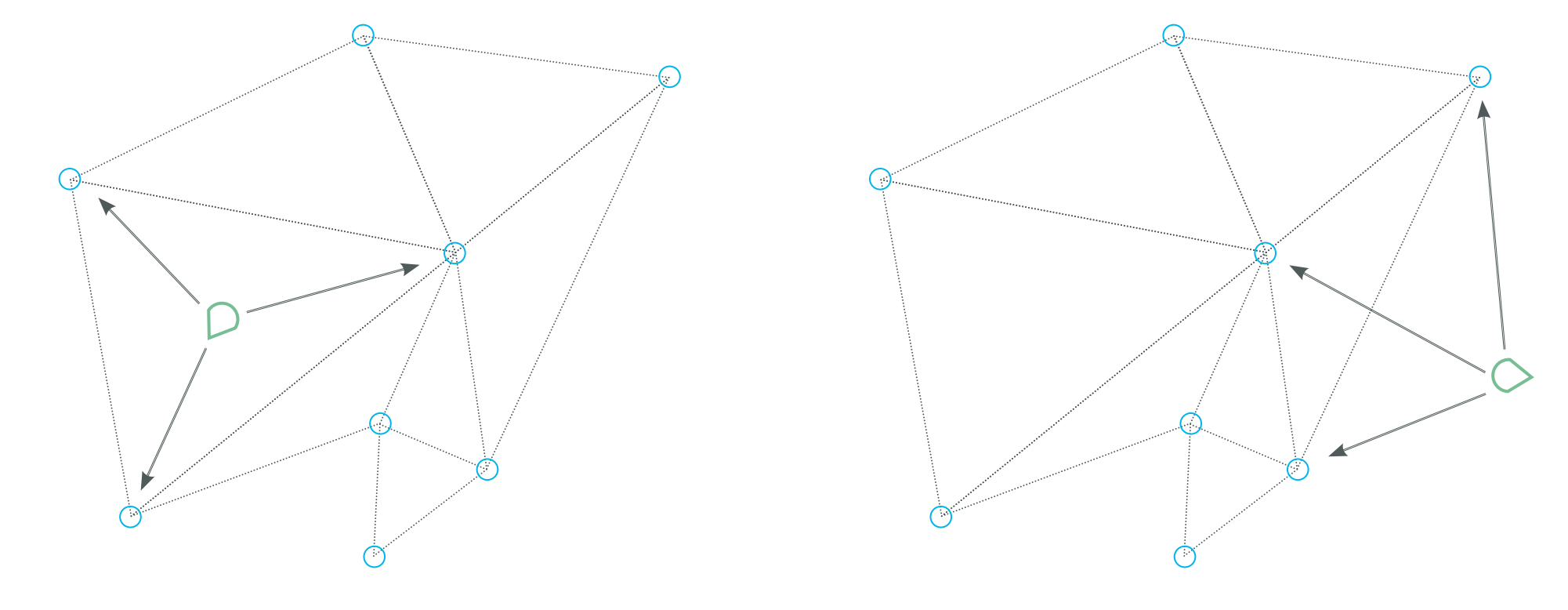

Underlying principle

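The design builds on the same principle GPS uses to locate one's position: triangulation. As soon as three reference points in space are known, a location can be determined. (Strictly speaking, GPS relies on trilateration, which uses distances to known points rather than angles, but the idea of fixing a position from several known references is the same.)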

User tests revealed that users currently lack this kind of overview and that it benefits their orientation. The system achieves triangulation by saving waypoints that are meaningful to the user and giving their location on demand.
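One way to give a waypoint's location on a 9x9 pin array is to place the user at the center pin and raise the pin that corresponds to the waypoint's offset. The following is a minimal sketch, not the project's implementation. It assumes a north-up layout, a user fixed at the center pin, and an equirectangular approximation for short walking distances. The names `GeoPoint`, `PinCell`, `waypointToPin` and the `rangeM` parameter are hypothetical.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical types; none of these names come from the project itself.
struct GeoPoint {
    double latDeg;
    double lonDeg;
};

struct PinCell {
    int row;  // 0 = top row (north of the user in this sketch)
    int col;  // 0 = leftmost column (west of the user)
};

constexpr int kGridSize = 9;             // 9x9 pin array
constexpr int kCenter = kGridSize / 2;   // assumed: user sits at pin (4, 4)
constexpr double kEarthRadiusM = 6371000.0;
constexpr double kPi = 3.14159265358979323846;

// Keep an index inside the pin array.
inline int clampIndex(int v) {
    return std::max(0, std::min(kGridSize - 1, v));
}

// Map a waypoint to a pin. rangeM is the distance (in meters) represented by
// the outermost ring of pins; farther waypoints are clamped to the edge.
PinCell waypointToPin(const GeoPoint& user, const GeoPoint& wp, double rangeM) {
    const double degToRad = kPi / 180.0;
    // Equirectangular approximation: offsets in meters north and east.
    const double northM = (wp.latDeg - user.latDeg) * degToRad * kEarthRadiusM;
    const double eastM = (wp.lonDeg - user.lonDeg) * degToRad * kEarthRadiusM *
                         std::cos(user.latDeg * degToRad);
    const double pinsPerMeter = kCenter / rangeM;
    const int col = kCenter + static_cast<int>(std::lround(eastM * pinsPerMeter));
    const int row = kCenter - static_cast<int>(std::lround(northM * pinsPerMeter));
    return PinCell{clampIndex(row), clampIndex(col)};
}
```

Clamping to the edge keeps distant waypoints perceptible as a direction even when they fall outside the displayed range. A real device would likely also rotate the layout by the user's heading, which this sketch leaves out.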

Product context

Navigation consists of wayfinding and motion. Wayfinding is the cognitive part of navigation and can be divided into four tasks:

- Orienting yourself in the environment: the phase that establishes where you are located.

- Choosing a route, which depends on the distance to the point of interest (POI), the condition of the paths, safety, simplicity and the POI itself.

- Keeping on track and making sure that you are still on the route.

- Recognizing that the destination has been reached.